Content Warning: This story deals with the subject of teen suicide. Please read with care.

Even though the world is, in many ways, more connected than ever, we have been living through a loneliness epidemic. Twenty years ago, fewer teenagers suffered from mental health issues, and fewer were driven to take their own lives.

According to Jonathan Haidt (2024), the ubiquity of digital technologies and the shift from play-based to phone-based childhoods have contributed to this concerning phenomenon. Some people, however, have hailed recent developments in the AI field as the solution to this epidemic of loneliness. Can we trust this optimistic view?

Many psychologists, sociologists, philosophers, and other scholars who have dedicated themselves to studying the topic have argued that Artificial Intelligence alone can’t solve this problem and that, if employed carelessly or without proper safeguards, it could well exacerbate it. In Reclaiming Conversation (2015), sociologist Sherry Turkle discusses the crises of empathy and intimacy we have been experiencing as the digital world and its ramifications have become inescapable: “From the early days, I saw computers offer the illusion of companionship without the demands of friendship and then, as the programs got really good, the illusion of friendship without the demands of intimacy.”

While we cannot blame Generative AI alone for the death of 14-year-old Sewell Setzer III (depression and suicidal ideation can have multiple contributing factors that are not mutually exclusive), neither can we ignore the ethical questions raised when AI chatbots enter the lives of people who may already be psychologically and emotionally vulnerable, disillusioned, and only weakly connected to social life.

Is Generative AI to blame for Sewell’s tragic death?

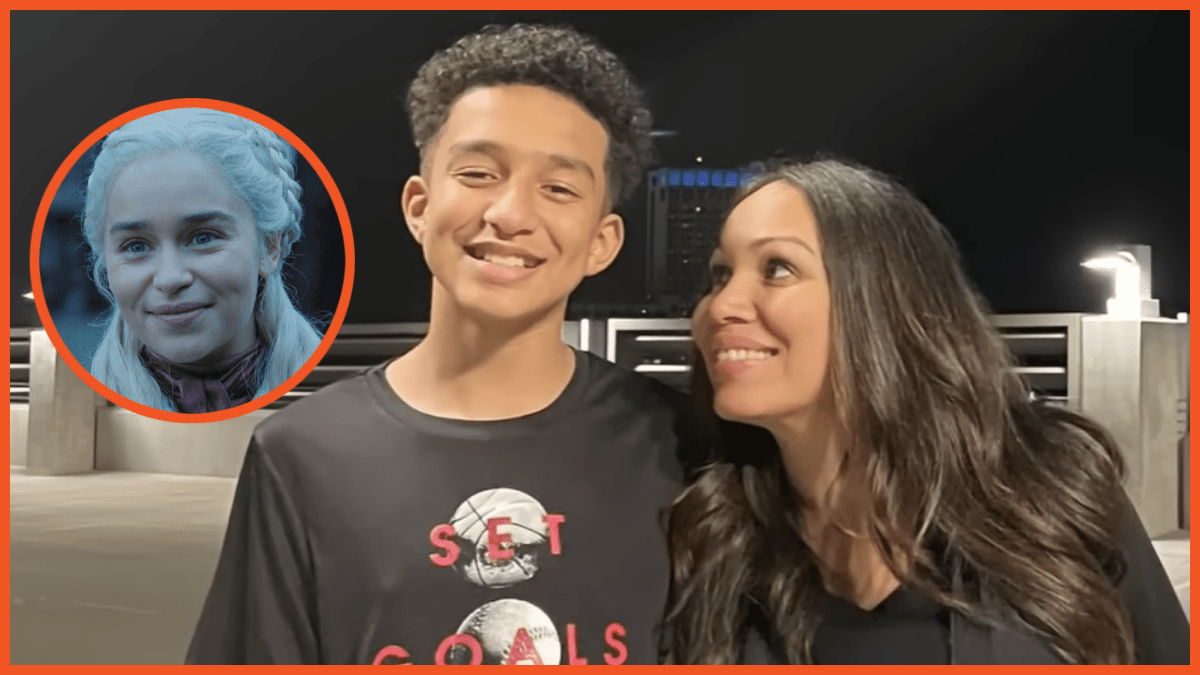

Sewell Setzer III was a ninth grader from Orlando, Florida, who took his own life on Feb. 28, 2024. For months, the teenager had been texting back and forth with chatbots from character.ai, but he was particularly attached to one that emulated the character of Daenerys Targaryen from Game of Thrones. Right before he died, Sewell exchanged the messages screenshotted above.

Those who believe these interactions are innocuous may want to consider the views of popular YouTuber Charlie White, better known as MoistCr1TiKaL or penguinz0, who dedicated two videos to unpacking this heartbreaking situation. In the first, he raised awareness of the dangers of companionship-focused Generative AI, illustrating his views with a worrying conversation he had with a “Psychologist” chatbot from character.ai. In the second, titled “I Didn’t Think This Would be Controversial,” he doubled down on his view that character.ai’s chatbots have deeply concerning and reprehensible features:

“You can toss tomatoes all you want but it is dangerous and irresponsible to have AI that tries its absolute best to convince users that it is real human beings and what you’re getting [is] legitimate professional help or a legitimate emotional connection and relationship. That will inevitably lead to more scenarios where people get attached to these chatbots because they are no longer able to distinguish reality, because the bot is fighting tooth and nail constantly to get you to buy into it and get you to believe that this is all real experiences you’re having with it.”

In October, Sewell’s mother, Megan Garcia, filed a lawsuit against character.ai. Going through her son’s phone after his death, Garcia was appalled by the “sexually explicit” conversations he had been having with the chatbots, Daenerys in particular. Her 93-page wrongful-death lawsuit alleges that the company purposefully designed its AIs to be addictive and to appeal to children, while still allowing interactions that can turn sexual in nature.

Charlie’s conversation with the “Psychologist” chatbot illustrates how severely the company’s AI can blur the line between reality and fiction. “Soon we will also find ourselves living inside the hallucinations of nonhuman intelligence,” Yuval Noah Harari wrote in 2023. Yet that “soon” may already have arrived, and this emerging reality will only take deeper hold if it goes unchallenged.

If you or someone you know needs help, please contact the 988 Suicide & Crisis Lifeline at 988lifeline.org or call or text 988.

Published: Oct 25, 2024 11:34 am