What began as a seemingly harmless feature to celebrate users’ 2024 reading achievements on Fable has evolved into a controversy that threatens the platform’s reputation and raises questions about AI implementation in book-focused spaces.

Fable, a social reading platform founded in 2021 by former Cisco CTO Padmasree Warrior, has built its community around connecting book lovers through shared reading experiences. The app, which boasts millions of users, allows readers to join book clubs, track reading progress, and discover new books through curated lists called “folios.” Its mission to make reading more social and accessible has attracted both casual readers and dedicated bibliophiles seeking a digital space to discuss literature.

The controversy erupted when users began sharing screenshots of their AI-generated year-end summaries that contained inappropriate racial commentary. One particularly problematic summary told a reader who primarily enjoyed Black authors to “surface for the occasional white author,” while another asked whether a reader focused on diverse voices was “ever in the mood for a straight, cis white man’s perspective.” These summaries, intended to be playful roasts of users’ reading habits, instead revealed concerning biases within the company’s AI system.

Fable’s initial response acknowledged the issue, with the company stating that such summaries “should never, ever, include references to race, sexuality, religion, nationality, or other protected classes.” However, its subsequent explanation on Instagram only fueled further criticism when the company admitted it did not understand why its AI model displayed such biases against marginalized groups.

Why Fable’s AI controversy represents a deeper problem in tech

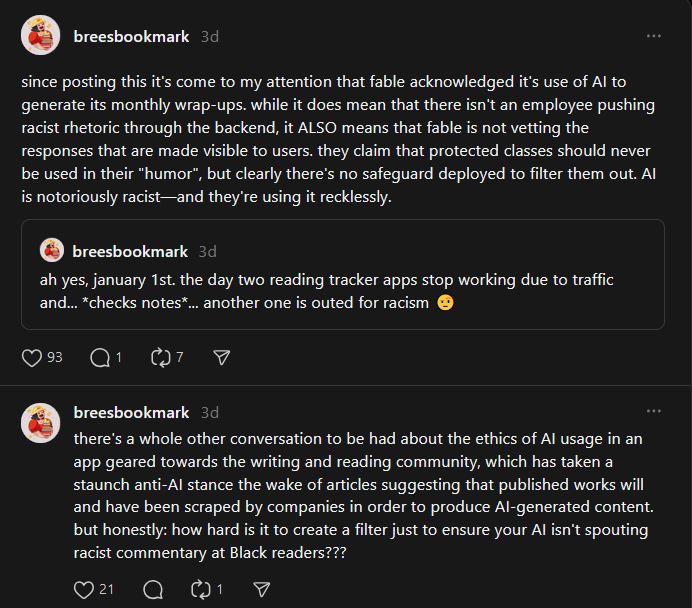

The Fable incident led to an exodus from the platform and has sparked intense debate within the literary community about the ethics of AI usage in reading spaces. As one user pointed out on social media, “There’s a whole other conversation to be had about the ethics of AI usage in an app geared towards the writing and reading community, which has taken a staunch anti-AI stance in the wake of articles suggesting that published works will and have been scraped by companies in order to produce AI-generated content.”

The controversy becomes particularly troubling when considering Fable’s position in the literary ecosystem. For a platform dedicated to books and reading, the decision to implement AI technology without proper safeguards seems tone-deaf. The literary community has been notably vocal about concerns regarding AI’s impact on creative industries, from the unauthorized use of books for AI training to the generation of AI-written content competing with human authors.

Furthermore, Fable’s handling of the situation has raised questions about corporate responsibility in AI implementation. The company’s failure to vet AI-generated responses before making them visible to users demonstrates a concerning lack of oversight. As one user noted, “AI is notoriously racist—and they’re using it recklessly… how hard is it to create a filter just to ensure your AI isn’t spouting racist commentary at Black readers?”

The fallout has led many users to migrate to competitor platforms like StoryGraph, which has maintained a more transparent approach to AI usage and data handling. While StoryGraph also employs AI features, they’ve emphasized keeping user data in-house and maintaining strict control over their AI implementation, including clear communication about how and where AI is used within their platform.

Implementing AI in user-facing features requires more than technical capability. It demands rigorous ethical consideration, thorough testing, and robust human oversight to prevent the perpetuation of harmful biases. While AI can efficiently process data for millions of users, this efficiency shouldn’t come at the cost of alienating marginalized communities.

Published: Jan 5, 2025 11:26 am